World's First Microprocessor

A 20-bit, pipelined, parallel multi-microprocessor chip set for the greatest fighter jet the USA has ever flown

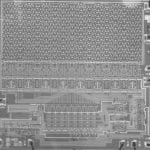

- Read Only Memory (ROM)

- 20-bit Parallel Multiplier

- 20-bit Parallel Divider

- Steering Multiplexer

- Random Access Memory (RAM)

- CPU / Special Logic

The F-14 “Tomcat” First Microprocessor

20-bit, pipelined, parallel processors, multi-processing, in-flight self-tested, dual-redundant system

The World's First Microprocessor was designed and developed from 1968 to 1970. This site describes the design work for a highly integrated MOS-LSI microprocessor chip set, begun in June 1968 and completed by June 1970. The chip set was designed for the US Navy F-14A “Tomcat” fighter jet by Mr. Steve Geller and Mr. Ray Holt as part of a design team at Garrett AiResearch Corp., under contract from Grumman Aircraft, the prime contractor for the US Navy. The MOS-LSI chips, called the MP944, were manufactured by American Microsystems, Inc. of Santa Clara, California.

The MOS-LSI chip set was part of the Central Air Data Computer (CADC), which controlled the moving surfaces of the aircraft and displayed pilot information. The CADC received input from five sources: a static pressure sensor, a dynamic pressure sensor, analog pilot information, a temperature probe, and digital switch pilot input. The output of the CADC controlled the moving surfaces of the aircraft: the wings, the maneuver flaps, and the glove vane controls. The CADC also drove four cockpit displays: Mach Speed, Altitude, Air Speed, and Vertical Speed. The CADC was a redundant system with built-in real-time self-testing. Any single failure in one system caused a switchover to the other.
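The switchover behavior described above can be illustrated with a minimal sketch. It is not the CADC's actual logic, which this page does not detail: the names `Channel`, `DualRedundantSystem`, and `step` are hypothetical, and the self-test is modeled as a simple callable that reports whether a channel is healthy.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Channel:
    """One of two identical computing channels (hypothetical model)."""
    name: str
    self_test: Callable[[], bool]  # returns True while the channel is healthy


class DualRedundantSystem:
    """Runs on the active channel; a failed self-test hands over to the standby."""

    def __init__(self, primary: Channel, backup: Channel) -> None:
        self.active = primary
        self.standby: Optional[Channel] = backup

    def step(self) -> str:
        """Self-test the active channel and return the name of the channel in control."""
        if not self.active.self_test():
            if self.standby is None:
                # Assumption: a second failure is simply reported as an error here.
                raise RuntimeError("no healthy channel remains")
            # Single failure: switch control to the other channel.
            self.active, self.standby = self.standby, None
        return self.active.name
```

Calling `step()` repeatedly returns the primary channel's name until its self-test fails, after which it returns the backup channel's name.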

Two state-of-the-art quartz sensors, a 16-bit high-precision analog-to-digital converter, a 16-bit high-precision digital-to-analog converter, the MOS-LSI chip set, and a very efficient power unit made up the complete CADC. A team of over 25 managers, engineers, programmers, and technicians from AiResearch and American Microsystems labored for two years to accomplish a design feat never before attempted: a complete, state-of-the-art, highly integrated digital air data computer. Previous designs were based on mechanical technology, consisting of precision gears and cams. Standard technology of the kind used commercially for the next five years, designed for the rugged military environment, allowed this feat to be accomplished.

In 1971, Mr. Ray Holt wrote a design paper on the MOS-LSI chip set, which was approved for publication by Computer Design magazine. However, for national security reasons, the US Navy would not approve the paper for publication. Mr. Holt tried again in 1985 to have the paper cleared, and the answer was again no. Finally, in April 1997, he started the process again and this time received clearance for publication as of April 21, 1998.

The entire contents of this original 1971 paper, “Architecture Of A Microprocessor”, are made available here. The first public announcement of the F-14A MOS-LSI microprocessor chip set was an article published by the Wall Street Journal on September 22, 1998. This paper and the details of the design were first presented publicly by Mr. Ray Holt at the Vintage Computer Festival held at the Santa Clara Convention Center on September 26-27, 1998.

For those historians who like claims, I respectfully submit the following claims on the F-14 MP944 microprocessor:

1st microprocessor chip set

1st aerospace microprocessor

1st fly-by-wire flight computer

1st military microprocessor

1st production microprocessor

1st fully integrated chip set microprocessor

1st 20-bit microprocessor

1st microprocessor with built-in programmed self-test and redundancy

1st microprocessor in a digital signal processing (DSP) application

1st microprocessor with execution pipeline

1st microprocessor with parallel processing

1st microprocessor with integrated math co-processors

1st Read-Only Memory (ROM) with a built-in counter
